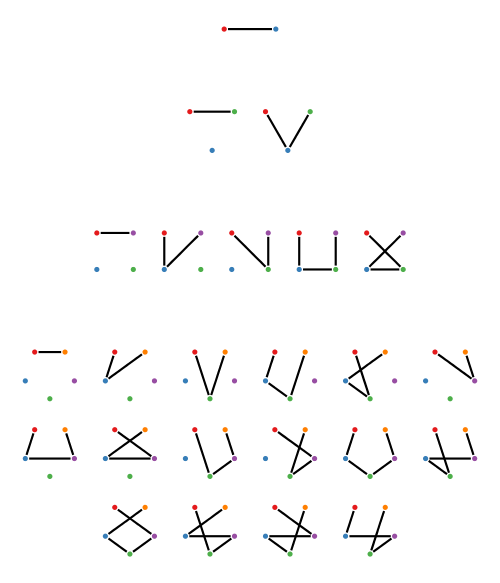

My previous post showed four rows of diagrams, where the th row (counting from zero) has diagrams with

dots. The diagrams in the

th row depict all possible paths that

- start at the top left dot,

- end at the top right dot, and

- never visit any dot more than once.

For a given diagram, we can choose which dots to visit (representing a subset of the dots), and we can visit the chosen dots in any order (a permutation). I will call the resulting things “subset permutations”, although I don’t know if there is some other more accepted term for them.

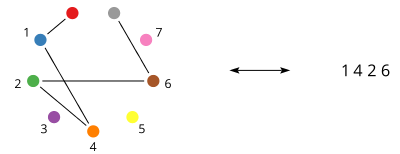

It’s not necessary to draw subset permutations as paths; it’s just one nice visual representation. If we number the dots from (excluding the top two, which are uninteresting), each path corresponds precisely to a permutation of some subset of

. Like this:

I thought of this idea the other day—of counting the number of “subset permutations”—and wondered how many there are of each size. I half expected it to turn out to be a sequence of numbers that I already knew well, like the Catalan numbers or certain binomial coefficients or something like that. So I was surprised when it turned out to be a sequence of numbers I don’t think I have ever encountered before (although it has certainly been studied by others).

So, how many subset permutations are there of size ? Let’s call this number

. From my previous post we can see that starting with

, the first few values are

. (When I saw 1, 2, 5, I thought sure it was going to be the Catalan numbers—but then the next number was 16 instead of 14…) Can we compute these more generally without just listing them all and counting?

Well, to choose a particular subset permutation of size , we can first choose how big we want the subset to be, i.e. how many dots to visit, which could be zero, or

, or anything in between. Once we choose to visit

out of

dots, then we have

choices for which dot to visit first, then

choices for which dot to visit second, all the way down to

choices for the last dot (you should convince yourself that

, not

, is correct!). This is often notated

So, in total then, is the sum of

over all possible

, that is,

This is already better than just listing all the subset permutations and counting. For example, we can compute that

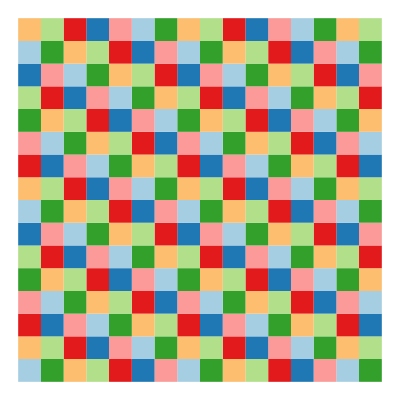

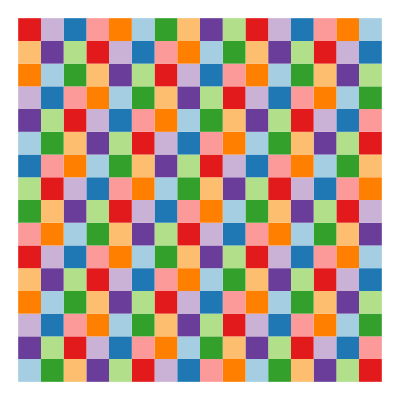

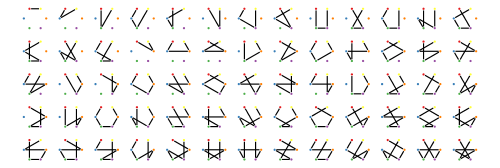

And sure enough, if we list them all, we get 65 (you’ll just have to take my word that this is all of them, I suppose!):

However, with a little algebra, we can do even better! First, let’s write out the sum for explicitly:

If we factor out of all the terms after the initial

, we get

so the subset permutation numbers actually follow a very simple recurrence! To get the th subset permutation number, we just multiply the previous one by

and add one.

and sure enough, . We can now easily calculate the next few subset permutation numbers:

A commenter also wondered about the particular order in which I listed subset permutations in my previous post. The short, somewhat disappointing answer is “whatever order came out of the standard library functions I used”. However, there are definitely more interesting things to say about the ordering, and I think I’ll probably write about that in another post.